Takeo Kanade, engineer: ‘Artificial vision will bring teleportation, but without decomposing your body and shipping it to another place’

The Japanese researcher discusses the use of robots in surgical operations, virtualized reality and why we must be smarter and faster than malicious technologies

Takeo Kanade (Hyōgo, Japan, 78 years old) speaks fluently about artificial vision, a field of research he has been working on for more than 40 years. This scientific discipline allows you to see a soccer match from the point of view of the ball or a tennis match through a hawk’s eye. The fundamental algorithms that Kanade has developed with his colleague Bruce Lucas, called the Lucas-Kanade method, help computers and robots understand moving images. His work has also helped improve robotic surgery, self-driving cars and facial recognition. “In the future, robots will be better than humans, in one way or another,” he says.

The researcher received his PhD in Electrical Engineering from Kyoto University in 1974. He discovered his passion for engineering when he went fishing at the age of five and made his own hook. He is now a professor of Computer Science and Robotics at Carnegie Mellon University in Pittsburgh and founder of the Quality of Life Technology Center, which he directed between 2006 and 2012.

Question. Do you think robotic vision will one day match human vision?

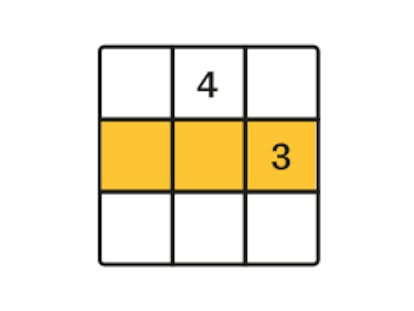

Answer. Yes, and at some point it may be better than human vision. In fact, in some areas it is already better, for example in computer facial recognition. For a long time it was thought that humans had a great advantage, as we are good at recognizing people we know. However, when we meet a person in a very unexpected environment, we quite often miss them.

Q. Which of the two is least likely to fail?

A. The human being. Self-driving cars can see up to 200 meters in all directions and recognize the location of other cars, pedestrians and bicycles very precisely, but we have a better understanding. We have some expectations about what is happening in the car in front of us. We can also recognize when we are driving near a school, and we know there’s a chance children may cross the road. Computers are trying to get to that level of understanding, but for now they are not that good at it, and that understanding is critical to avoiding accidents.

Q. Are we going to have 100% autonomous driving in the future?

A. Within 10 years or even less. However, people have to be convinced. It is like the car, which we use, although it causes accidents and deaths. But the benefits of a car are so great that, as a society, we accept it.

Q. In the world of artificial vision, what are the upcoming challenges?

A. Can you be in a world that is mapped from the real world? That’s the next level. I call it virtualized reality. Virtual reality now is not virtual: it is a real world that is virtualized. The next thing is to interact with the environment and others who are virtually there. In this case, when there is a mirror in that virtual reality, you will be able to virtually see yourself in it. Another challenge is teleportation, but without decomposing your body and shipping it to another place, like in the movie Star Trek. It is done with tools, like drones, that give you a reaction force through your body to your legs so that you can teleport, visually and acoustically, and physically interact in real time.

Q. How can you prevent the malicious use of deepfakes?

A. I feel partially responsible for it. In 2010, I made a video of President Obama speaking in Japanese with images generated from my face. I thought it was a joke video. You can’t fight deepfakes; the only thing that prevents them is our integrity.

Q. But if technology can turn against us...

A. It is used for the purpose of deception, but the technology cannot know what its objective is. A type of technology can be created and used for purposes other than those for which it was developed. You have to be smarter and faster than it, and be informed. Take the watermark, for example: once you introduce it and it becomes known, there is instantly a way of erasing it.

Q. How can computer vision be used to improve the quality of life of people with disabilities?

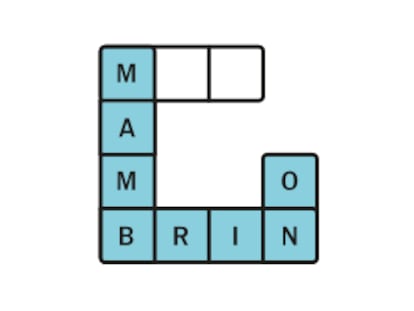

A. It is about developing quality of life technology, as we do in our center. Its essence is to increase the independence of people with disabilities or older people. It is not about robots doing everything, but the opposite. My formula is that the perfect robot equals what you want to do minus what you can do. That is, the robot compensates for the part that the human cannot do, so that the task can be done together with the robot. Furthermore, in cases of rehabilitation or education, the perfect robot must do a little less so that the person regains motivation and ability.

Q. How have your works contributed to surgical precision?

A. Surgical robots can use more developed sensors than human surgeons. Human sensors are very limited: we do not have acoustic sensors or multimodal sensors. Before a surgical operation, robots can, for example, detect the location of a tumor, its shape or size, with X-rays or an MRI, and at the time of surgery, they use imaging sensors.

Q. How has origami helped your career?

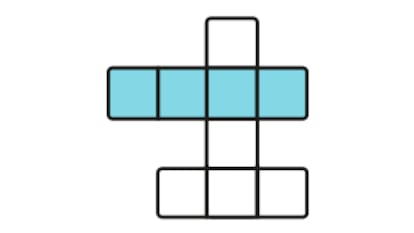

A. The essence of my theory, A Theory of Origami World, is that the perception of the three-dimensional shape of an image must derive from a mathematical explanation and not from the result of learning. If, for example, you draw a box, there are five more possible shapes that are generated with exactly the same image, but are different from the box. When I give a talk, for example, I joke with the audience that the lecture hall and the building could be different shapes and the audience looks around and imagines it.
